Hey there!

Hi, I am Yash. I am a research fellow at the Air Traffic Management Research Institute (ATMRI), Nanyang Technological University (NTU), Singapore. Here, I work at the intersection of machine learning, optimization, and air traffic control to address near-future air traffic demands.

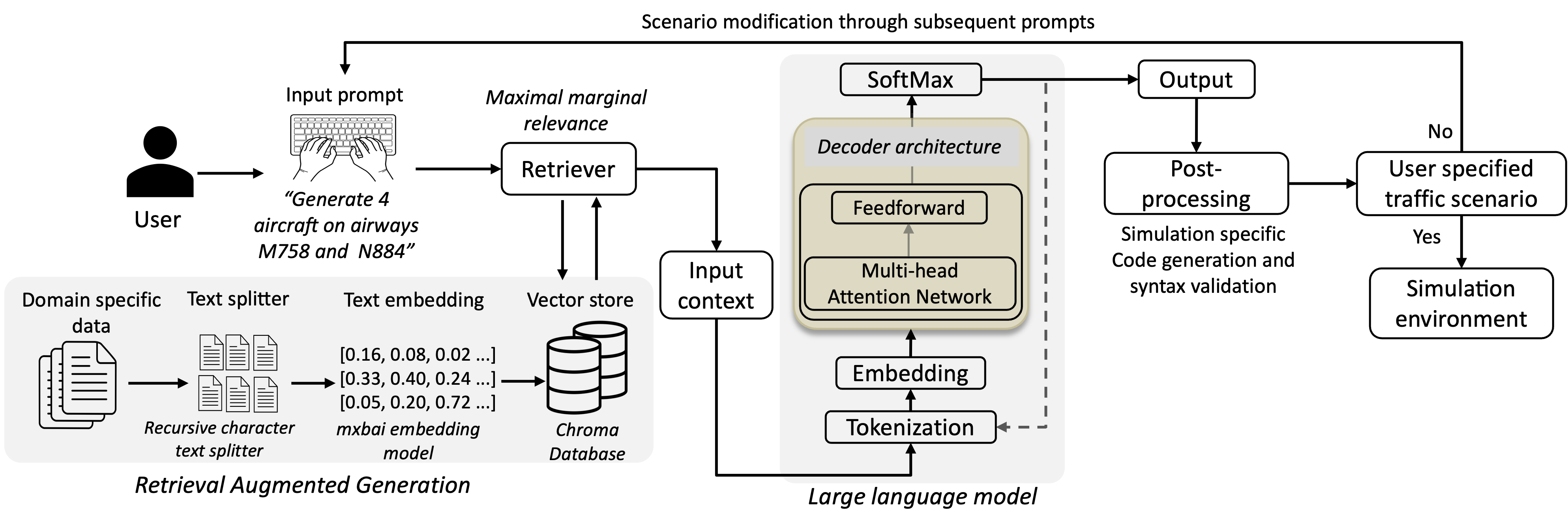

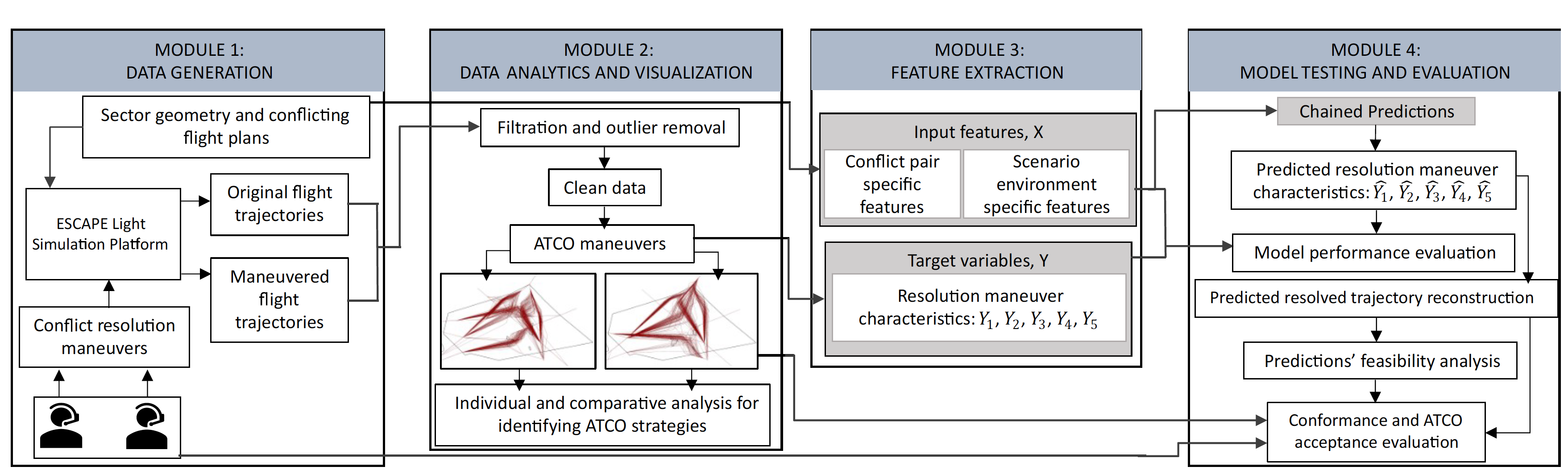

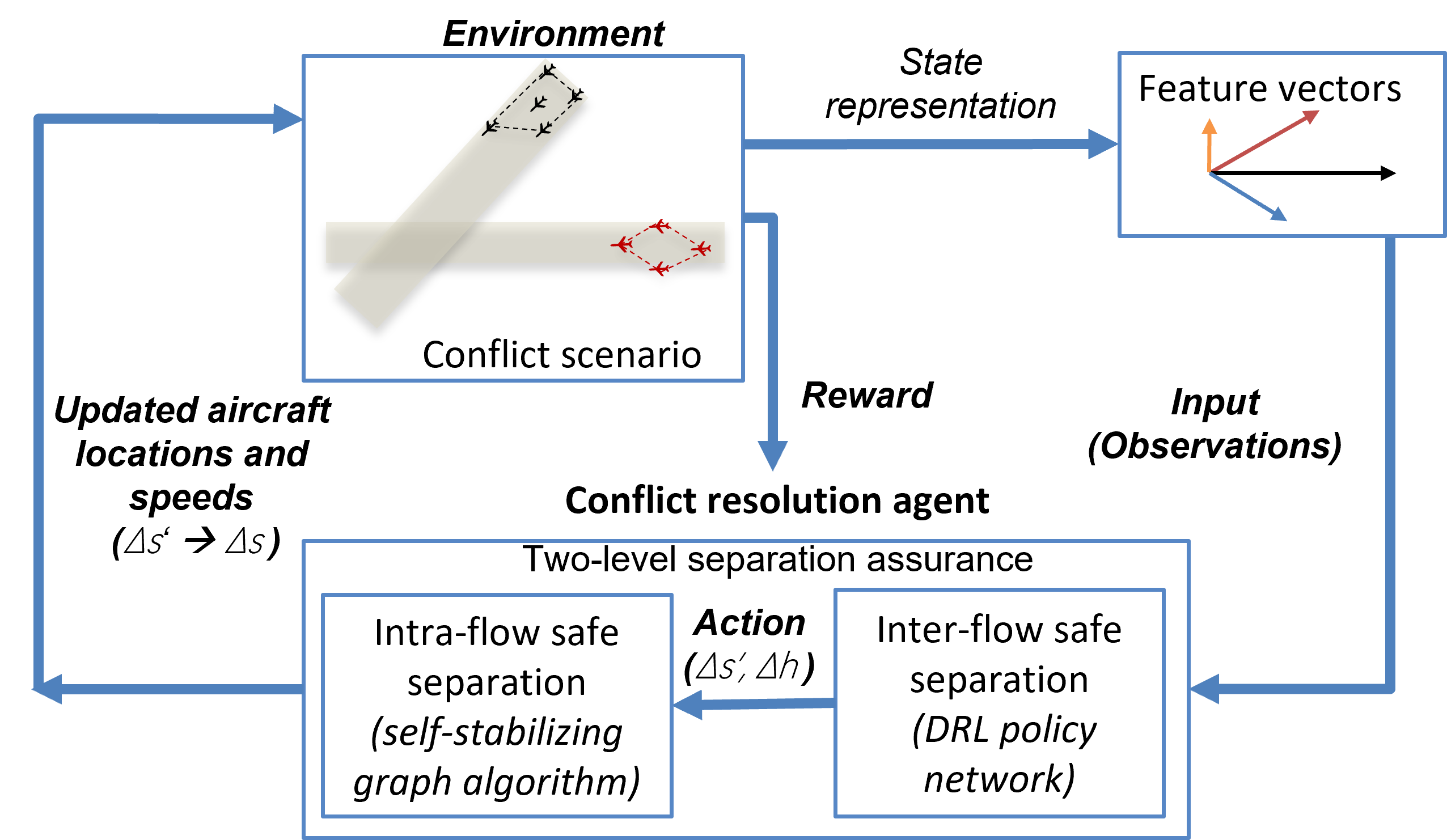

Currently, I am leading a team of three scientists using large language models to generate safety-critical scenarios for the en-route phase of flight. The primary motivation for this work is threefold: conflict scenarios are scarce in historical data, creating such scenarios is complex and iterative, and traditional techniques make it difficult to customize and interactively enhance traffic scenarios. I received my Ph.D. in 2024, with a focus on developing machine learning models for air traffic conflict resolution that gain greater acceptance from air traffic controllers.